Did artificial intelligence shape the 2024 US election?

Days after New Hampshire voters received a robocall with an artificially generated voice that resembled President Joe Biden’s, the Federal Communications Commission banned the use of AI-generated voices in robocalls.

It was a flashpoint. The 2024 United States election would be the first to unfold amid wide public access to AI generators, which let people create images, audio and video – some for nefarious purposes.

Institutions rushed to limit AI-enabled misdeeds.

Sixteen states enacted legislation around AI’s use in elections and campaigns; many of these states required disclaimers in synthetic media published close to an election. The Election Assistance Commission, a federal agency supporting election administrators, published an “AI toolkit” with tips election officials could use to communicate about elections in an age of fabricated information. States published their own pages to help voters identify AI-generated content.

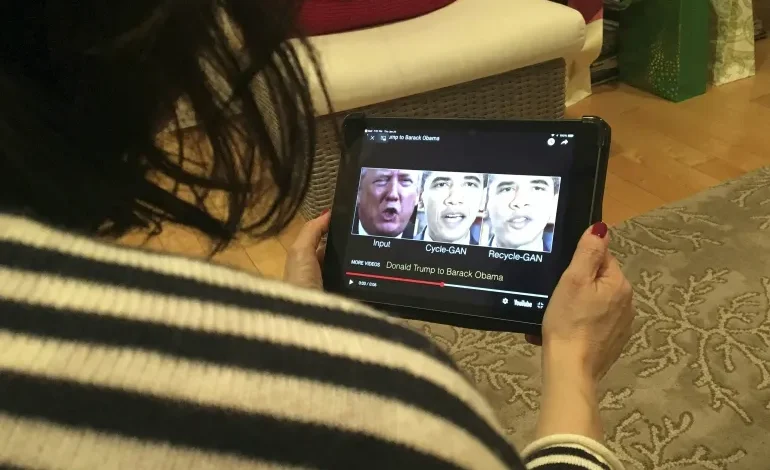

Experts warned about AI’s potential to create deepfakes that made candidates appear to say or do things that they didn’t. The experts said AI’s influence could hurt the US both domestically – misleading voters, affecting their decision-making or deterring them from voting – and abroad, benefitting foreign adversaries.

“The use of generative AI turned out not to be necessary to mislead voters,” said Paul Barrett, deputy director of the New York University Stern Center for Business and Human Rights. “This was not ‘the AI election.’”

Daniel Schiff, assistant professor of technology policy at Purdue University, said there was no “massive eleventh-hour campaign” that misled voters about polling places and affected turnout. “This kind of misinformation was smaller in scope and unlikely to have been the determinative factor in at least the presidential election,” he said.

The AI-generated claims that got the most traction supported existing narratives rather than fabricating new claims to fool people, experts said. For example, after former President Donald Trump and his vice presidential running mate, JD Vance, falsely claimed that Haitians were eating pets in Springfield, Ohio, AI images and memes depicting animal abuse flooded the internet.

Meanwhile, technology and public policy experts said, safeguards and legislation minimised AI’s potential to create harmful political speech.

Schiff said AI’s potential election harms sparked “urgent energy” focused on finding solutions.

“I believe the significant attention by public advocates, government actors, researchers, and the general public did matter,” Schiff said.

Meta, which owns Facebook, Instagram and Threads, required advertisers to disclose AI use in any advertisements about politics or social issues. TikTok applied a mechanism to automatically label some AI-generated content. OpenAI, the company behind ChatGPT and DALL-E, banned the use of its services for political campaigns and prevented users from generating images of real people.

Misinformation actors used traditional techniques

Siwei Lyu, computer science and engineering professor at the University at Buffalo and a digital media forensics expert, said AI’s power to influence the election also faded because there were other ways to gain this influence.

“In this election, AI’s impact may appear muted because traditional formats were still more effective, and on social network-based platforms like Instagram, accounts with large followings use AI less,” said Herbert Chang, assistant professor of quantitative social science at Dartmouth College. Chang co-wrote a study that found AI-generated images “generate less virality than traditional memes,” though memes created with AI can also go viral.

Prominent people with large followings easily spread messages without needing AI-generated media. Trump, for example, repeatedly falsely said in speeches, media interviews and on social media that illegal immigrants were being brought into the US to vote even though cases of noncitizens voting are extremely rare and citizenship is required for voting in federal elections. Polling showed Trump’s repeated claim paid off: More than half of Americans in October said they were concerned about noncitizens voting in the 2024 election.

“We did witness the use of deepfakes to seemingly quite effectively stir partisan animus, helping to establish or cement certain misleading or false takes on candidates,” Daniel Schiff said.

He worked with Kaylyn Schiff, an assistant professor of political science at Purdue, and Christina Walker, a Purdue doctoral candidate, to create a database of political deepfakes.

The majority of the deepfake incidents were created as satire, the data showed. The next most common were deepfakes intended to harm someone’s reputation, followed by deepfakes created for entertainment.

Deepfakes that criticized or misled people about candidates were “extensions of traditional US political narratives,” Daniel Schiff said, such as ones painting Harris as a communist or a clown, or Trump as a fascist or a criminal. Chang agreed with Daniel Schiff, saying generative AI “exacerbated existing political divides, not necessarily with the intent to mislead but through hyperbole.”

Major foreign influence operations relied on actors, not AI

Researchers warned in 2023 that AI could help foreign adversaries conduct influence operations faster and cheaper. The Foreign Malign Influence Center – which assesses foreign influence activities targeting the US – in late September said AI had not “revolutionised” those efforts.

To threaten the US elections, the centre said, foreign actors would have to overcome AI tools’ restrictions, evade detection and “strategically target and disseminate such content”.